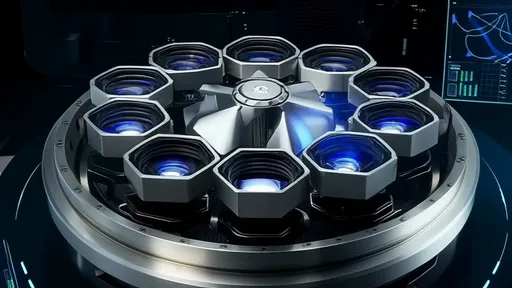

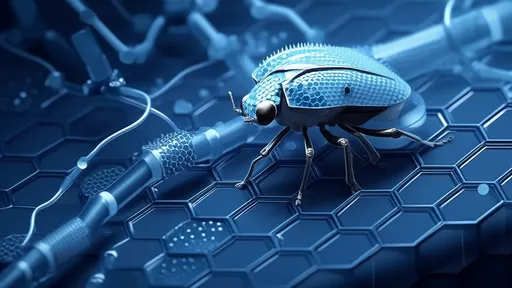

The fields of autonomous navigation and advanced surveillance have witnessed a significant innovation with the advent of compound eye LiDAR systems. Inspired by the multifaceted vision of insects, these panoramic imaging systems are changing how machines perceive and interact with their environments. Unlike traditional single-lens or rotating LiDAR setups, this bio-inspired technology captures ultra-wide-field 3D data without mechanical scanning, opening new possibilities in robotics, augmented reality, and smart city infrastructure.

At the heart of this revolution lies a biomimetic design principle. Just as a dragonfly's compound eye processes many visual inputs simultaneously through thousands of ommatidia, the artificial counterpart integrates hundreds of micro-laser emitters and receivers into a compact hemispherical array. This architecture eliminates the mechanical scanning components that undermine the reliability of conventional systems, while achieving a full 180-degree vertical and 360-degree horizontal field of view. Early adopters report a 40% reduction in power consumption compared with spinning LiDAR units, along with sub-centimeter depth resolution at ranges of 30 meters.
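Sub-centimeter depth resolution at 30 meters places tight demands on timing. Assuming the units use direct pulsed time-of-flight ranging (the article does not state the ranging method), range follows r = c·t/2, so the timing precision involved can be sketched as follows. The function names are illustrative only.

```python
# Speed of light in vacuum, m/s (exact by SI definition).
C = 299_792_458.0

def round_trip_time(range_m: float) -> float:
    """Time for a pulse to reach a target at range_m and return, in seconds."""
    return 2.0 * range_m / C

def timing_resolution_for(depth_res_m: float) -> float:
    """Timing resolution needed to separate two returns depth_res_m apart.

    Assumes direct time-of-flight: from r = c * t / 2, a range step dr
    corresponds to a time step dt = 2 * dr / c.
    """
    return 2.0 * depth_res_m / C

if __name__ == "__main__":
    print(f"round trip at 30 m: {round_trip_time(30.0) * 1e9:.1f} ns")
    print(f"timing for 1 cm depth resolution: {timing_resolution_for(0.01) * 1e12:.1f} ps")
```

Under that assumption, a return from 30 meters arrives about 200 ns after emission, and resolving 1 cm means distinguishing arrival times roughly 67 ps apart. Other ranging schemes, such as FMCW, trade this timing requirement for different constraints.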

Military applications drove the initial development cycles, where the system's ability to detect low-flying drones through foliage has proven invaluable. However, the technology's migration into civilian sectors is accelerating. Automotive engineers particularly praise the absence of blind spots in urban driving scenarios. "When mounted at a vehicle's four corners, these units create a protective bubble of awareness that even accounts for curbside children or cyclists in adjacent lanes," explains Dr. Helena Reinhart, lead researcher at the Munich Institute of Photonics. Her team recently demonstrated how the system's 20 Hz refresh rate can track more than 200 moving objects simultaneously, a critical capability for autonomous taxis navigating crowded intersections.
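The article does not describe how the Munich team's tracker works. As a generic illustration of what frame-to-frame tracking at 20 Hz involves, the sketch below predicts each track forward by one 50 ms frame under a constant-velocity assumption, then matches it to the nearest detection within a gate. The `Track` class, the `GATE_M` value, and the greedy matching strategy are hypothetical choices, not the researchers' method.

```python
import math
from dataclasses import dataclass

FRAME_DT = 1.0 / 20.0  # 20 Hz refresh rate, as cited in the text
GATE_M = 1.0           # hypothetical gate: max distance (m) for a valid match

@dataclass
class Track:
    track_id: int
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0

def predict(track: Track, dt: float = FRAME_DT) -> tuple:
    """Constant-velocity prediction of where a track should appear next frame."""
    return track.x + track.vx * dt, track.y + track.vy * dt

def associate(tracks: list, detections: list, gate: float = GATE_M) -> dict:
    """Greedy nearest-neighbour matching; returns {track_id: detection_index}.

    All track/detection pairs within the gate are sorted by distance and
    accepted closest-first, skipping any track or detection already used.
    """
    pairs = []
    for t in tracks:
        px, py = predict(t)
        for j, (dx, dy) in enumerate(detections):
            dist = math.hypot(dx - px, dy - py)
            if dist <= gate:
                pairs.append((dist, t.track_id, j))
    pairs.sort()
    matched, used = {}, set()
    for _, tid, j in pairs:
        if tid not in matched and j not in used:
            matched[tid] = j
            used.add(j)
    return matched

def update(tracks: list, detections: list, dt: float = FRAME_DT) -> dict:
    """Move matched tracks to their detections and re-estimate velocity."""
    matches = associate(tracks, detections)
    for t in tracks:
        j = matches.get(t.track_id)
        if j is not None:
            nx, ny = detections[j]
            t.vx, t.vy = (nx - t.x) / dt, (ny - t.y) / dt
            t.x, t.y = nx, ny
    return matches

if __name__ == "__main__":
    # Two cyclists moving in opposite directions, 3 m apart.
    tracks = [Track(0, 0.0, 0.0, vx=5.0), Track(1, 0.0, 3.0, vx=-5.0)]
    print(update(tracks, [(-0.25, 3.0), (0.25, 0.0)]))
```

Greedy matching keeps the sketch short; production trackers typically combine Kalman filtering with a global assignment method such as the Hungarian algorithm, which matters when hundreds of objects crowd an intersection.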

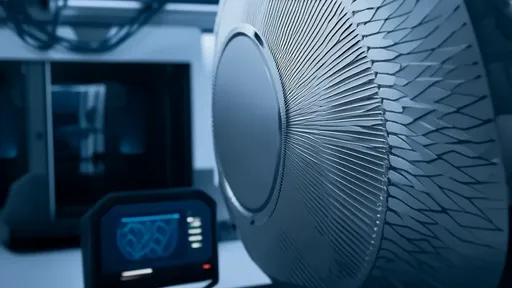

The manufacturing breakthrough behind this leap involves wafer-level optics fabrication. Semiconductor-style batch production allows thousands of microscopic laser diodes and matching receivers to be fabricated on curved substrates. Tokyo-based Nippon Optronics holds key patents for the hybrid III-V/silicon integration process that maintains beam coherence across the array. Its latest consumer-grade model fits within a housing the size of a hockey puck while delivering 0.1° angular resolution. That is coarser than human visual acuity (roughly one arcminute, or about 0.017°), but because the sensor measures range directly at every point, it surpasses human binocular vision in certain parameters, such as absolute depth measurement.
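To put the 0.1° figure in context, here is a quick geometric sketch. Adjacent beams separated by an angle θ land s = 2r·tan(θ/2) apart at range r, and a field of view spanning a solid angle Ω holds roughly Ω/θ² sample directions. The function names are illustrative, and treating the field of view as a full hemisphere (2π steradians) sampled uniformly at 0.1° is an assumption.

```python
import math

def footprint(range_m: float, angular_res_deg: float) -> float:
    """Lateral spacing (m) between adjacent beams at range_m: s = 2 r tan(theta / 2)."""
    return 2.0 * range_m * math.tan(math.radians(angular_res_deg) / 2.0)

def directions_for_solid_angle(solid_angle_sr: float, angular_res_deg: float) -> float:
    """Approximate number of sample directions when each covers theta x theta steradians."""
    cell_sr = math.radians(angular_res_deg) ** 2
    return solid_angle_sr / cell_sr

if __name__ == "__main__":
    print(f"beam spacing at 30 m: {footprint(30.0, 0.1) * 100:.2f} cm")
    hemisphere_sr = 2.0 * math.pi  # assumption: full hemispherical coverage
    print(f"directions per frame: {directions_for_solid_angle(hemisphere_sr, 0.1):,.0f}")
```

At 0.1° spacing, beams fall about 5 cm apart at 30 meters, and a full hemisphere holds roughly two million sample directions, which helps explain why raw data rates for these sensors run so high.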

Challenges remain in computational processing. The raw data stream from a single compound eye LiDAR can exceed 8 Gbps, necessitating novel edge computing architectures. Startups such as Boston's Cerebra Technologies have developed neuromorphic processors that mimic the optic lobe of insects, filtering out irrelevant background noise while prioritizing moving threats. Their field-programmable gate arrays (FPGAs) implement selective attention algorithms that reduce bandwidth requirements by 90% without sacrificing critical detail.
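The article does not say how Cerebra's selective attention algorithms work. A much simpler technique, frame differencing against the previous range image, illustrates the general idea of forwarding only what changed and dropping the static background. The threshold value and function names below are hypothetical.

```python
def select_changes(prev: list, curr: list, threshold_m: float = 0.05) -> list:
    """Return (cell_index, new_range_m) for cells whose range changed by more than threshold_m.

    Cells that stayed within the threshold are treated as static background
    and dropped; only the changed cells would be forwarded downstream.
    """
    return [(i, b) for i, (a, b) in enumerate(zip(prev, curr)) if abs(b - a) > threshold_m]

def kept_fraction(updates: list, n_cells: int) -> float:
    """Fraction of range-image cells that survive the filter."""
    return len(updates) / n_cells

if __name__ == "__main__":
    prev = [10.0] * 10
    curr = list(prev)
    curr[1] = 10.01  # sensor noise, below threshold
    curr[3] = 9.5    # object moving closer
    curr[7] = 12.0   # object moving away
    updates = select_changes(prev, curr)
    print(updates, kept_fraction(updates, len(curr)))
```

If only 10% of cells change in a typical frame, an 8 Gbps stream would shrink to roughly 0.8 Gbps, before accounting for the extra bytes needed to send each cell's index alongside its value.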

Environmental robustness sets these systems apart from camera-based alternatives. During recent Arctic trials, a prototype unit remained functional at -40°C while conventional cameras failed. The laser array's ability to penetrate moderate snowfall and its resistance to glare make it well suited to maritime navigation systems. "We're seeing shipping companies adopt this for autonomous container vessels," notes industry analyst Mark Williams. "The panoramic hazard detection prevents collisions with iceberg fragments that radar sometimes misses."

Ethical debates have emerged regarding the technology's surveillance potential. Its combination of long-range person identification (through gait analysis) and 3D environment mapping raises privacy concerns. Regulatory bodies in the EU have proposed geofencing restrictions for deployments in public spaces, along with mandatory visible-light indicators when systems are active. Manufacturers counter that the same features enable life-saving search-and-rescue applications, such as locating earthquake survivors in rubble where thermal cameras prove ineffective.

Looking ahead, researchers are exploring multi-spectral compound vision by integrating terahertz and ultraviolet channels. This could enable material identification at a distance, for instance distinguishing between a cardboard box and a metallic weapon. The Defense Advanced Research Projects Agency (DARPA) is funding development of self-healing micro-lens arrays that maintain functionality despite partial damage. As production scales, analysts project that the compound eye LiDAR market, currently valued at $1.2 billion, will grow tenfold to about $12 billion by 2030, potentially making single-lens systems obsolete for many applications.
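A tenfold rise implies a steep annual growth rate. The sketch below computes the compound annual growth rate (CAGR) behind the projection, assuming the $1.2 billion figure refers to 2025, the year of publication; the article does not state the baseline year.

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate that turns start_value into end_value over years."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

start = 1.2e9          # market size cited in the text, USD
end = start * 10       # "tenfold by 2030"
years = 2030 - 2025    # assumption: the $1.2B figure is a 2025 value
print(f"implied CAGR: {implied_cagr(start, end, years):.1%}")  # implied CAGR: 58.5%
```

Under that assumption, the market would need to grow by roughly 58.5% every year for five consecutive years; a later baseline year would imply an even steeper rate.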

The convergence of biology-inspired design and photonic innovation continues to yield surprises. Last month, a joint Harvard-MIT team published findings on dynamic focal length adjustment, mimicking how certain insects vary optical parameters across individual ommatidia. This could lead to systems that achieve wide-field surveillance and zoom simultaneously, without moving parts. Such advances suggest we are merely scratching the surface of what compound eye LiDAR technology can ultimately achieve in reshaping machine perception.

By /Aug 14, 2025
